INSIGHT

What we learned operationalizing Responsible AI with Model Edge at PwC

"Actions speak louder than words."

Recent advancements in generative AI technologies have accelerated their integration into our daily lives, at work and at home. As a result, Responsible AI (RAI), the set of practices for managing AI risks in ways that preserve value and engender safety and trust, has become critically important. AI and its risks have been a focus of data science and AI teams for years. However, the spread of GenAI beyond these teams, including to contractors, vendors and embedded software, has pushed the regulatory landscape in the US and globally to mature rapidly. New frameworks and guidance proposing how to implement RAI are now being released at a record pace.

We've prioritized RAI for years at PwC, and it’s at the core of our AI implementation principles. To augment the AI risk management processes and guidance our AI governance teams were creating, we decided to incorporate tooling that would support those processes at scale. One of our tasks in that effort was to build a Responsible AI process into Model Edge, a PwC product for governing and validating models across our firm.

Throughout this journey, we discovered that actions speak louder than words, and that implementing Responsible AI firmwide, not just within data science and Model Risk Management (MRM) functions, is no easy task. Model Edge has enabled us to begin building a digital inventory that tracks models from inception and design through testing and implementation. It also allows us to capture updates and approvals with an audit trail to meet evolving industry and regulatory standards.

The results were well worth the effort: we have successfully assessed and documented our risk and compliance GenAI models in Model Edge. Since then, we’ve launched a growing array of domain-specific generative AI models to our clients, with trust as our guiding principle.

Our process of operationalizing Responsible AI quickly shifted from theoretical discussions about primary and secondary risks to practical steps for managing the risks of our products’ highest-risk models. We identified key AI system stages that required risk management, including:

Conducting risk assessments for models and assets to capture their unique risk profiles across six key risk domains: model risks, data risks, infrastructure risks, use risks, legal and compliance risks, and process risks.

Capturing essential information in our model inventory.

Integrating these concepts into risk-based testing and performance monitoring approaches for our AI systems.

Balancing reduced friction against risk management to encourage adoption.

The AI risk assessment

As we delved into the risk assessment and tiering process, we realized it had to go beyond our previous approaches for statistical machine learning and other traditional models. To understand the risks associated with generative AI models, we had to consider areas beyond the models themselves, including specific risks related to data privacy, data usage, cybersecurity, external users, explainability and transparency in development and updates, and emerging regulations.

As a result, we had to adjust our risk-tiering methodology: determining which controls to apply to Chat Generative Pre-trained Transformer (ChatGPT) models, identifying the unique risks and controls for open-source models, and working out how to measure incremental risk for domain-specific use cases built on top of foundation GPT models. The goal: understand both the inherent risk and the residual risk that remains after controls are applied.
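To make the distinction between inherent and residual risk concrete, here is a minimal Python sketch. It assumes a common 1-5 likelihood × impact scoring convention and treats each control as removing a fixed fraction of whatever risk remains; the class names, scales and effectiveness figures are hypothetical illustrations, not Model Edge's methodology.

```python
from dataclasses import dataclass, field


@dataclass
class Control:
    """A mitigating control; effectiveness is an assumed fraction in [0, 1]."""
    name: str
    effectiveness: float


@dataclass
class RiskItem:
    """One risk for a model or use case, scored on assumed 1-5 scales."""
    name: str
    likelihood: int
    impact: int
    controls: list[Control] = field(default_factory=list)

    def inherent(self) -> int:
        # Inherent risk: exposure before any controls are applied.
        return self.likelihood * self.impact

    def residual(self) -> float:
        # Residual risk: each control removes its share of the remaining risk
        # (an illustrative assumption, not a standard formula).
        remaining = float(self.inherent())
        for control in self.controls:
            remaining *= 1.0 - control.effectiveness
        return round(remaining, 2)


# Hypothetical example: hallucination risk in a domain-specific GPT use case.
risk = RiskItem(
    name="hallucinated citations",
    likelihood=4,
    impact=4,
    controls=[Control("human review of outputs", 0.5),
              Control("retrieval grounding", 0.25)],
)
print(risk.inherent(), risk.residual())  # 16 6.0
```

Because residual risk depends on which controls are in place, the same foundation model can end up with different residual scores across the use cases built on top of it.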

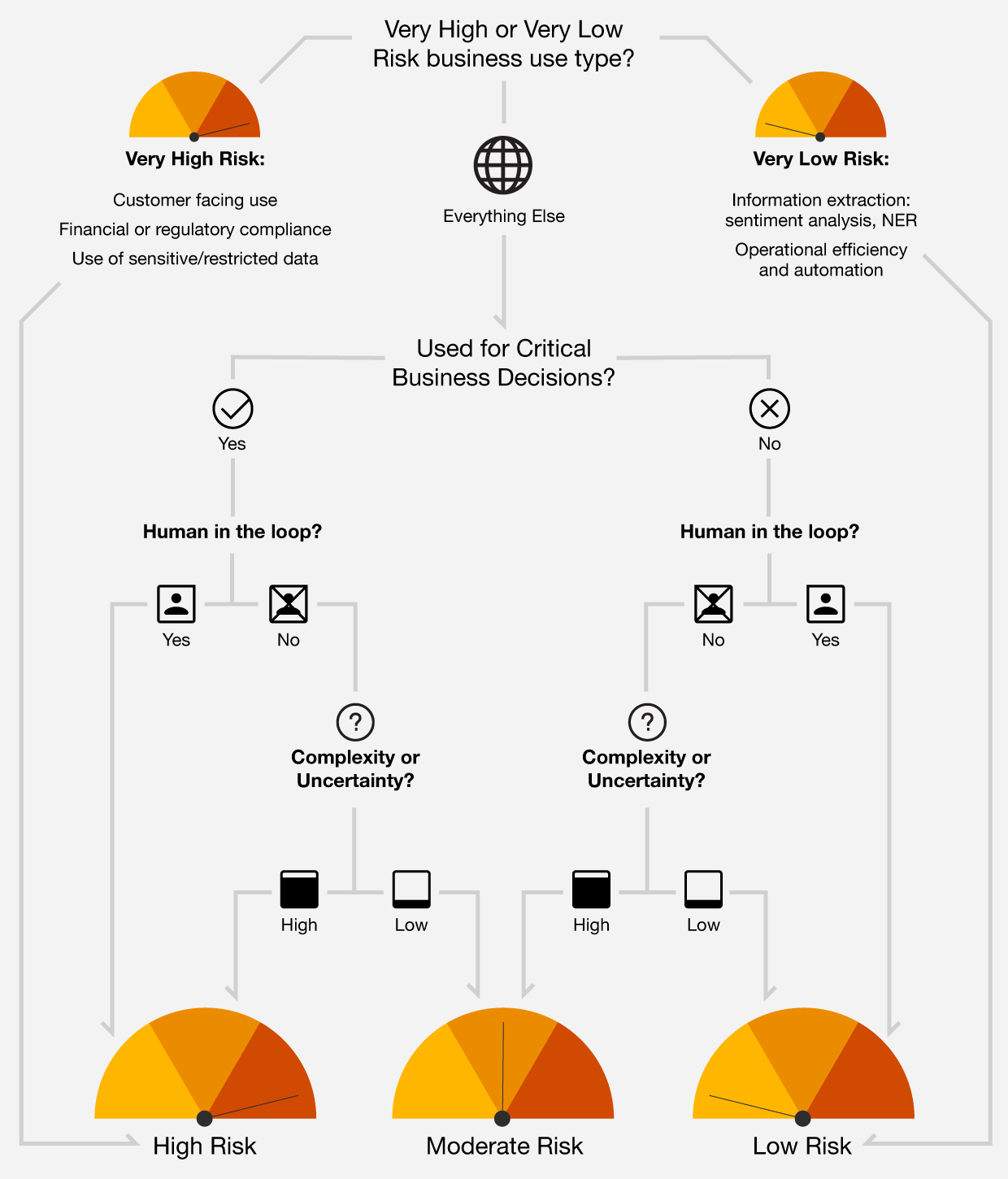

Model Edge helps streamline the process with a triage questionnaire that guides the user in determining which risks are elevated for a particular model and use case. For example, privacy and compliance risks that are critical for customer-facing chatbots may be lower for chatbots designed for internal use only. Our AI risk-rating methodology measures risk using these key factors:

Business use type: Recognizes that certain uses are inherently high risk (e.g., systems that are client-facing, directly impact consumers or leverage sensitive consumer data).

AI system importance/materiality: Assesses the level of potential financial, societal or reputational impact of the AI system.

Complexity and uncertainty: Evaluates the likelihood of risk arising from inherent complexity, lack of transparency or explainability, potential for overfitting (where overly specialized models struggle with new data), lack of human review of outputs and other AI system characteristics.
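A triage questionnaire of this kind can be sketched as a set of yes/no answers mapped onto the six risk domains. The question set and mapping rules below are hypothetical assumptions chosen to mirror the chatbot example; they are not the questions Model Edge actually asks.

```python
from dataclasses import dataclass
from enum import Enum


class RiskDomain(Enum):
    """The six risk domains used in the risk assessment."""
    MODEL = "model"
    DATA = "data"
    INFRASTRUCTURE = "infrastructure"
    USE = "use"
    LEGAL_COMPLIANCE = "legal and compliance"
    PROCESS = "process"


@dataclass(frozen=True)
class TriageAnswers:
    """Hypothetical triage questions; a real questionnaire would be longer."""
    client_facing: bool
    uses_sensitive_data: bool
    open_source_model: bool
    human_reviews_output: bool


def elevated_domains(answers: TriageAnswers) -> set[RiskDomain]:
    """Return the domains these answers flag as elevated (illustrative rules)."""
    elevated: set[RiskDomain] = set()
    if answers.client_facing:
        # External users raise use and privacy/compliance exposure;
        # an internal-only tool leaves these domains at baseline.
        elevated |= {RiskDomain.USE, RiskDomain.LEGAL_COMPLIANCE}
    if answers.uses_sensitive_data:
        elevated |= {RiskDomain.DATA, RiskDomain.LEGAL_COMPLIANCE}
    if answers.open_source_model:
        # Assumed rule: less visibility into training data and update cadence.
        elevated |= {RiskDomain.MODEL, RiskDomain.INFRASTRUCTURE}
    if not answers.human_reviews_output:
        # Unreviewed outputs put more weight on model behavior and process controls.
        elevated |= {RiskDomain.MODEL, RiskDomain.PROCESS}
    return elevated


internal_bot = TriageAnswers(client_facing=False, uses_sensitive_data=False,
                             open_source_model=False, human_reviews_output=True)
customer_bot = TriageAnswers(client_facing=True, uses_sensitive_data=False,
                             open_source_model=False, human_reviews_output=True)
print(sorted(d.value for d in elevated_domains(internal_bot)))  # []
print(sorted(d.value for d in elevated_domains(customer_bot)))  # ['legal and compliance', 'use']
```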

A decision tree can help convert the complex multidimensional AI risk rating framework into a transparent, explainable, and simple series of yes/no and high/low responses, as illustrated in the following example.

Figure 1: Illustrative risk tiering framework informed by priority risk categories and associated impact
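The decision-tree idea translates directly into code. This sketch assumes three yes/no or high/low inputs matching the three rating factors and a three-tier scale (Tier 1 highest); the branch logic is a hypothetical illustration, not the framework in Figure 1. Returning the path alongside the tier keeps every rating explainable.

```python
from typing import NamedTuple


class TierResult(NamedTuple):
    tier: int         # 1 = highest risk, 3 = lowest (assumed scale)
    path: list[str]   # the answers that led to this tier, for explainability


def _level(flag: bool) -> str:
    return "high" if flag else "low"


def risk_tier(high_risk_use: bool, high_materiality: bool,
              high_complexity: bool) -> TierResult:
    """Walk a hypothetical yes/no, high/low decision tree to a risk tier."""
    path = [f"high-risk business use: {'yes' if high_risk_use else 'no'}",
            f"materiality: {_level(high_materiality)}"]
    if high_risk_use and high_materiality:
        return TierResult(1, path)
    if not high_risk_use and not high_materiality:
        return TierResult(3, path)
    # Mixed answers: complexity and uncertainty break the tie.
    path.append(f"complexity/uncertainty: {_level(high_complexity)}")
    if high_risk_use:
        return TierResult(1 if high_complexity else 2, path)
    return TierResult(2 if high_complexity else 3, path)


result = risk_tier(high_risk_use=False, high_materiality=True, high_complexity=True)
print(result.tier)  # 2
for step in result.path:
    print(" ->", step)
```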

Developing an inventory framework

We went through numerous iterations of our inventory framework to capture key risk data useful to both risk specialists and executives. In our data model, we defined a taxonomy of assets (e.g., non-model assets and model systems) and of the models embedded within those assets, some of which have many-to-many relationships. An effectively designed model and asset inventory helps connect the dots between risks, systems and their owners across multiple stakeholders in the organization, including the Chief Information Security Officer, Chief Privacy Officer and General Counsel.
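A many-to-many link between assets and models is what lets an inventory answer cross-cutting questions, such as which owners are affected when one foundation model changes. The following Python sketch is an in-memory illustration with hypothetical field names and identifiers; a production inventory would sit on a database.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Model:
    model_id: str
    risk_tier: int  # 1 = highest risk (assumed scale)


@dataclass(frozen=True)
class Asset:
    asset_id: str
    kind: str   # e.g. "model system" or "non-model" (hypothetical labels)
    owner: str


class Inventory:
    """In-memory asset/model inventory with a many-to-many link index."""

    def __init__(self) -> None:
        self.models: dict[str, Model] = {}
        self.assets: dict[str, Asset] = {}
        # Index both directions so either side can be queried cheaply.
        self._models_by_asset: dict[str, set[str]] = defaultdict(set)
        self._assets_by_model: dict[str, set[str]] = defaultdict(set)

    def add_model(self, model: Model) -> None:
        self.models[model.model_id] = model

    def add_asset(self, asset: Asset) -> None:
        self.assets[asset.asset_id] = asset

    def link(self, asset_id: str, model_id: str) -> None:
        """Record that an asset embeds a model; both must already be registered."""
        if asset_id not in self.assets or model_id not in self.models:
            raise KeyError(f"unknown asset {asset_id!r} or model {model_id!r}")
        self._models_by_asset[asset_id].add(model_id)
        self._assets_by_model[model_id].add(asset_id)

    def owners_affected_by(self, model_id: str) -> set[str]:
        """Owners of every asset that embeds the given model."""
        return {self.assets[a].owner for a in self._assets_by_model.get(model_id, set())}

    def riskiest_tier(self, asset_id: str) -> Optional[int]:
        """Highest-risk (lowest-numbered) tier among models in an asset, if any."""
        tiers = [self.models[m].risk_tier
                 for m in self._models_by_asset.get(asset_id, set())]
        return min(tiers, default=None)


inv = Inventory()
inv.add_model(Model("llm-base", risk_tier=2))
inv.add_model(Model("pii-detector", risk_tier=1))
inv.add_asset(Asset("client-chatbot", "model system", "Team A"))
inv.add_asset(Asset("doc-summarizer", "model system", "Team B"))
inv.link("client-chatbot", "llm-base")
inv.link("client-chatbot", "pii-detector")
inv.link("doc-summarizer", "llm-base")
print(sorted(inv.owners_affected_by("llm-base")))  # ['Team A', 'Team B']
print(inv.riskiest_tier("client-chatbot"))         # 1
```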

Frameworks for risk-based testing

One of our biggest challenges was settling on a testing framework based on our risk assessment. Testing generative AI models proved much more challenging than testing traditional models because of the lack of “ground truth.” Traditional AI models can usually be scored against predetermined, objectively correct answers, such as the solution to a math equation. GenAI produces new, often subjective content, so evaluating its output involves human judgment, subjective evaluation guidelines, and/or comparisons against existing data or benchmarks. Additionally, different classes of use cases required unique testing approaches based on their specific sets of risks. We developed use-case-specific testing matrices across a wide range of model types and use cases, like the one below.

Example use case testing processes and metrics

Figure 2: Example use case testing metrics for a GenAI-enabled summarization use case
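A use-case-specific testing matrix can be represented as a mapping from use case to metrics, each with a threshold and a direction. The metric names and threshold values below are placeholders assumed for illustration; they are not the metrics shown in Figure 2.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricSpec:
    name: str
    threshold: float
    higher_is_better: bool = True

    def passes(self, value: float) -> bool:
        # Compare in the direction that counts as "good" for this metric.
        if self.higher_is_better:
            return value >= self.threshold
        return value <= self.threshold


# Hypothetical matrix: use case -> metrics with placeholder thresholds.
TEST_MATRIX: dict[str, list[MetricSpec]] = {
    "summarization": [
        MetricSpec("factual_consistency", 0.90),
        MetricSpec("key_point_coverage", 0.80),
        MetricSpec("toxicity_rate", 0.01, higher_is_better=False),
    ],
    "classification": [MetricSpec("f1", 0.85)],
}


def evaluate(use_case: str, results: dict[str, float]) -> dict[str, bool]:
    """Pass/fail per metric for a use case; a missing result counts as a failure."""
    return {
        spec.name: spec.name in results and spec.passes(results[spec.name])
        for spec in TEST_MATRIX[use_case]
    }


report = evaluate("summarization", {
    "factual_consistency": 0.93,
    "key_point_coverage": 0.74,
    "toxicity_rate": 0.0,
})
print(report)
# {'factual_consistency': True, 'key_point_coverage': False, 'toxicity_rate': True}
```

Keeping thresholds per use case, rather than one global bar, reflects the point that different classes of use cases call for different tests.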

Model Edge made operationalization of this testing framework possible. Its open API and data explorer feature — which helps users explore, analyze and visualize data — connect to source code and databases, pull in testing results, and populate testing and validation reports with charts, tables and descriptions to accelerate the process.

Monitoring and reporting

Ongoing monitoring of AI system risks and performance after implementation is a critical task, made more important by the constantly evolving nature of GenAI systems. Performance drift can occur rapidly, so monitoring results and implementing effective controls are crucial to keeping outputs predictable. We therefore determined what needed to be monitored, and how, by looking at specific use cases. As illustrated in Figure 2, our testing metrics had defined thresholds, typically set according to model outcome variability and business use criticality. These thresholds served as leading indicators of potential risk events, flagging what needed further investigation.

Model Edge can help automate the monitoring process and send alerts to model owners when a threshold breach occurs that may require investigation or escalation.
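Threshold-based monitoring with owner alerts can be sketched in a few lines. This version assumes a rolling-mean check over a fixed window and a caller-supplied notify callback standing in for whatever alerting channel is used; it is not Model Edge's monitoring API, and the metric, bound and owner are hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ThresholdMonitor:
    """Alerts the owner when a metric's rolling mean falls below a lower bound."""
    metric: str
    owner: str
    lower_bound: float
    window: int
    notify: Callable[[str, str], None]  # stand-in for email, chat, ticketing, etc.
    _values: deque = field(init=False, repr=False)

    def __post_init__(self) -> None:
        # Only the most recent `window` observations are kept.
        self._values = deque(maxlen=self.window)

    def record(self, value: float) -> bool:
        """Add an observation; return True if it triggers an alert."""
        self._values.append(value)
        if len(self._values) < self.window:
            return False  # not enough history to judge drift yet
        mean = sum(self._values) / len(self._values)
        if mean < self.lower_bound:
            self.notify(self.owner,
                        f"{self.metric}: rolling mean {mean:.3f} below {self.lower_bound}")
            return True
        return False


alerts: list[tuple[str, str]] = []
monitor = ThresholdMonitor(
    metric="factual_consistency",
    owner="model-owner@example.com",
    lower_bound=0.90,
    window=3,
    notify=lambda owner, msg: alerts.append((owner, msg)),
)
flags = [monitor.record(v) for v in [0.95, 0.94, 0.93, 0.80, 0.78]]
print(flags)  # [False, False, False, True, True]
```

Checking a rolling mean rather than single observations trades a little detection speed for fewer false alarms, which suits thresholds used as leading indicators rather than hard failures.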

Adoption and maintenance

Our product is only successful if customers use it, so we wanted to make the Responsible AI process efficient yet effective. To address this, we took two approaches. First, we knew that a big bang approach, where we implement the process all at once, could be overwhelming and not yield the desired results. We wanted stakeholders to witness early wins and value. So, we adopted a concentric model approach, starting with a small scope of high-risk models and gradually expanding to more models across the enterprise and lower-risk tiers and applications. Second, we adjusted the number of steps and clicks to streamline the user experience for model owners and users who were less technically inclined, allowing them to seamlessly navigate the information capture process for our risk assessment, testing and monitoring.

As with any journey, success lies in the path taken, not just the destination reached. We are thrilled to have taken the first steps toward operationalizing Responsible AI and are eager to share our experiences to help other organizations accelerate their own journeys.

Authors

Vikas Agarwal

Financial Services Risk & Regulatory Leader and Risk Technology Leader, PwC

Olga Harris

Financial Services Risk & Regulatory Principal, PwC

Jason Dulnev

Financial Services Risk & Regulatory Principal, PwC

Ilana Golbin Blumenfeld

PwC Labs, US Responsible AI Research Leader, PwC

Learn more

Model Edge: This digital platform helps internal and external stakeholders better understand your models, making it easier to identify bias, detect model degradation and demonstrate more effectively. Advanced models become transparent and explainable, enabling stakeholders across the enterprise.
